The future is not AI-generated, but AI-assisted—and that distinction matters more than most headlines suggest.

Exploring why the distinction between AI-generated and AI-assisted futures matters for how we build, create, and think about technology's role in human progress.

“Just in: the latest YC-backed AI startup is going to replace [insert a job title].” I’m pretty sure you’ve come across headlines like these, and you might be alarmed about some dystopian future ahead. You are right about the dystopian future part, but it won’t come from AI replacing us outright. Rest assured, this article was not AI-generated. AI-assisted? For sure.

Before we dive deeper, it’s important to establish some definitions: what do I mean by AI-generated and AI-assisted? AI-generated is what people often dismiss as “AI slop”: content with no human input aside from mere prompts. When I say AI-assisted, I’m referring to AI automating certain tasks within existing workflows. And if we look at the trends, people seem to be enjoying AI-assisted workflows; they not only give better results but are also accepted by consumers.

The AI boom has benefitted mid- to senior-level software engineers the most. Instead of writing code, the job has shifted toward quality assurance: reviewing AI-generated code and fixing AI-generated bugs with more AI-generated suggestions. Commit messages are more descriptive now that they are AI-generated. Instead of digging through Stack Overflow, engineers fix common bugs, and even some less-reported issues, with a single prompt. Some tools now have a “copy as Markdown” button: you can copy an error as Markdown, paste it into your favourite AI tool, and it resolves the issue. Yet no matter how many new tools claim to create complete systems from scratch, human intervention remains mandatory for real-world systems.

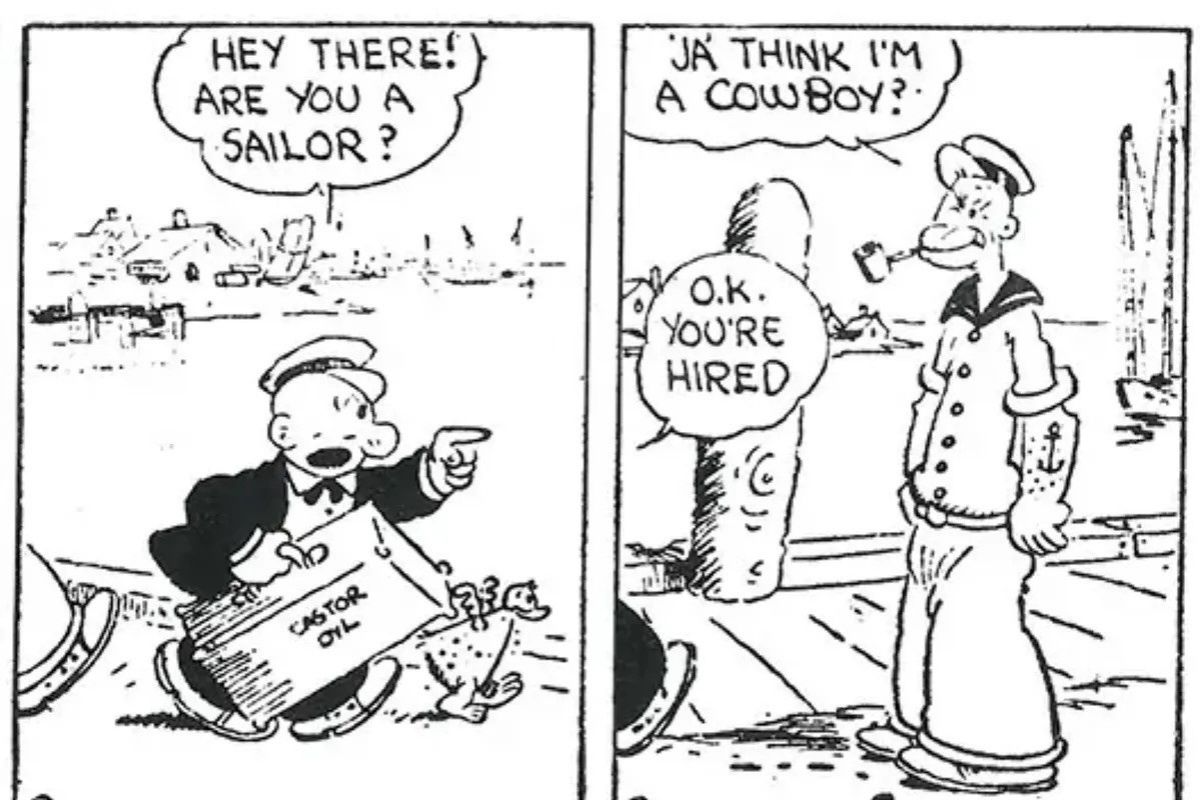

People are misled in part because tech companies frame AI as a whimsical tool that cannot be explained, even though it is explainable. Think of LLMs as sophisticated search engines. Before, you searched through indexed web pages; now you can search for the specific bits you need, in your desired format and length. Remember the funny memes that claimed all engineers do is copy and paste from other codebases and Stack Overflow? AI is just that. The copy-pasting has been automated, and hence those who only knew how to copy and paste are easily being replaced.

How does this translate to other professions? Things will change, but not the way most people expect. A century ago, when literacy was rare, people were hired just because they could read and write. As literacy spread, people assumed these skills would lose their economic value. They did not. People still get hired because they know how to read and write, albeit for more complex work. Even though most people can read and write, very few know how to write content worth reading, and very few readers know how to read between the lines.

These people do use AI tools for research, proofreading and whatnot. One might argue that AI can generate whole paragraphs, and it certainly can. But as regulations increasingly require publishers to disclose AI use (Steam already does this), an interesting question emerges: will people read an opinion labelled as AI-generated? We are already seeing a trend of people avoiding products that use AI-generated marketing content, as most of this content looks generic and repetitive and may come across as a scam. This does give hope.

But I wouldn’t hold on to that optimism too tightly, as I recently came across news of a Sri Lankan man who reportedly made hundreds of thousands of dollars by making AI-generated propaganda videos catering to far-right audiences in the UK. Of all the things AI can remove, human intent is not one of them; what it can do is amplify that intent, for better or for worse.